Sensor Fusion Techniques Used in IVAS

Sensor fusion is central to the Integrated Visual Augmentation System (IVAS): it integrates data from multiple sensors into a single, comprehensive picture of the environment. The sections below describe the techniques involved.

1. Definition of Sensor Fusion

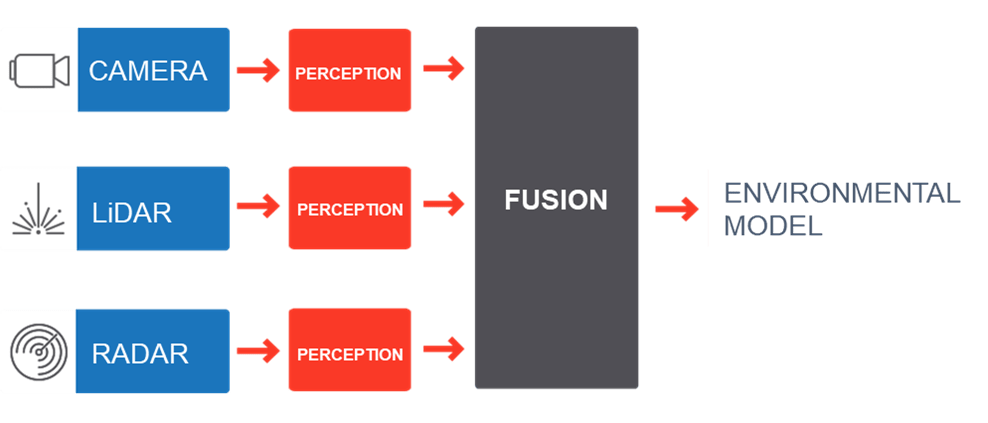

- Concept: Sensor fusion combines data from various sensors to improve accuracy, reliability, and situational awareness. It leverages complementary strengths of different sensors to provide a more complete picture than any single sensor could offer.

2. Types of Sensors in IVAS

- Optical Cameras: Capture visual information in the visible spectrum.

- Infrared Sensors: Detect heat signatures, useful in low-light and obscured visibility conditions.

- Lidar: Measures distances using laser light, creating detailed 3D maps of the environment.

- Environmental Sensors: Provide data on conditions like temperature, humidity, and atmospheric pressure.

3. Fusion Techniques

Data-Level Fusion: This involves combining raw sensor data before any processing. Techniques include:

- Kalman Filtering: A recursive estimator that tracks the state of a dynamic system from a series of noisy measurements. It alternates between predicting the next state from a motion model and correcting that prediction with each new measurement, and it is optimal for linear systems with Gaussian noise.

- Particle Filtering: Suited to non-linear or non-Gaussian systems, it represents the probability distribution of the estimated state with a set of weighted samples (particles).
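To make the predict/correct cycle concrete, here is a minimal sketch of a scalar Kalman filter that fuses readings of the same quantity from two sensors. It is an illustration only: the source does not describe how IVAS implements filtering, and the function name `kalman_fuse`, the constant-state model, and all noise values are assumptions chosen for the example.

```python
def kalman_fuse(readings, process_var=1e-4, x0=0.0, p0=1e3):
    """Scalar Kalman filter over a (nearly) constant state.

    readings: one entry per time step; each entry is a list of
              (measured_value, measurement_variance) pairs, one per sensor.
    Returns a list of (estimate, estimate_variance), one per time step.
    All parameter values here are illustrative assumptions.
    """
    x, p = x0, p0  # state estimate and its variance
    history = []
    for step in readings:
        # Predict: the state is assumed constant, so only uncertainty grows.
        p += process_var
        # Update: apply each sensor's measurement in turn. Sequential
        # updates are valid when the sensor noises are independent.
        for z, r in step:
            k = p / (p + r)      # Kalman gain: how much to trust z
            x += k * (z - x)     # move the estimate toward the measurement
            p *= (1.0 - k)       # uncertainty shrinks after each update
        history.append((x, p))
    return history


# Hypothetical range readings (metres): a noisy sensor (variance 0.5)
# and a more precise one (variance 0.1), both observing about 10 m.
readings = [
    [(10.3, 0.5), (9.9, 0.1)],
    [(9.6, 0.5), (10.1, 0.1)],
    [(10.4, 0.5), (10.0, 0.1)],
]
history = kalman_fuse(readings)
```

After three steps the estimate sits close to 10 and its variance is lower than that of either sensor alone, which is the practical payoff of fusing them.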

Feature-Level Fusion: In this method, features extracted from the sensor data are combined. It includes:

- Image Registration: Aligning images from different sensors (e.g., optical and infrared) to identify corresponding features.

- Keypoint Matching: Finding and matching keypoints across different sensor data to enhance tracking and recognition.
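Keypoint matching can be sketched without any imaging library once each keypoint is reduced to a numeric descriptor. The example below pairs descriptors by nearest neighbour and rejects ambiguous pairs with a ratio test, a common heuristic that is swapped in here as an assumption and is not something the source attributes to IVAS. The function name `match_keypoints`, the threshold of 0.75, and the two-dimensional descriptors are all hypothetical.

```python
import math


def match_keypoints(desc_a, desc_b, ratio=0.75):
    """Match descriptors in desc_a to their nearest neighbours in desc_b.

    A match (i, j) is kept only if the best candidate is clearly closer
    than the second best (ratio test), which filters ambiguous keypoints.
    """
    matches = []
    for i, da in enumerate(desc_a):
        # Euclidean distance from this descriptor to every candidate.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if not dists:
            continue
        best_dist, best_j = dists[0]
        if len(dists) == 1 or best_dist < ratio * dists[1][0]:
            matches.append((i, best_j))
    return matches


# Toy descriptors standing in for features from two sensors' images.
optical = [(0.0, 0.0), (1.0, 1.0), (9.0, 0.1)]
infrared = [(1.1, 0.9), (0.1, 0.0), (9.0, 0.0), (9.0, 0.2)]
matches = match_keypoints(optical, infrared)
```

The third optical descriptor lies equally close to two infrared descriptors, so the ratio test drops it rather than guess; the first two are matched unambiguously.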

Decision-Level Fusion: Combines decisions or classifications made by different sensors or algorithms. Techniques include:

- Voting Systems: Each sensor makes a prediction, and the most common result is chosen as the final decision.

- Dempster-Shafer Theory: A framework that assigns belief masses to sets of hypotheses and combines evidence from independent sources using Dempster's rule of combination, yielding a degree of belief for each hypothesis.
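Both decision-level techniques are small enough to sketch directly. The code below is a minimal illustration under stated assumptions: the labels, the mass values, and the function names `majority_vote` and `dempster_combine` are hypothetical, and nothing here is taken from an actual IVAS implementation. Mass functions are represented as dictionaries keyed by `frozenset` hypotheses.

```python
from collections import Counter
from itertools import product


def majority_vote(labels):
    """Return the most common label; ties go to the label seen first."""
    return Counter(labels).most_common(1)[0][0]


def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule of combination.

    Each mass function maps a frozenset of hypotheses to a mass in [0, 1],
    with masses summing to 1. Mass falling on empty intersections is
    treated as conflict and normalised away.
    """
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        common = a & b
        if common:
            combined[common] = combined.get(common, 0.0) + wa * wb
        else:
            conflict += wa * wb
    if 1.0 - conflict < 1e-12:
        raise ValueError("sources are in total conflict")
    return {h: w / (1.0 - conflict) for h, w in combined.items()}


# Voting: three hypothetical classifiers label the same object.
decision = majority_vote(["vehicle", "person", "vehicle"])

# Dempster-Shafer: each source spreads belief over hypotheses; mass on
# EITHER expresses "cannot tell which".
VEHICLE = frozenset({"vehicle"})
PERSON = frozenset({"person"})
EITHER = VEHICLE | PERSON
m_optical = {VEHICLE: 0.6, EITHER: 0.4}
m_thermal = {VEHICLE: 0.7, PERSON: 0.2, EITHER: 0.1}
fused = dempster_combine(m_optical, m_thermal)
```

In this example the fused belief in "vehicle" is higher than either source assigned on its own, because the two sources largely agree. Unlike voting, the rule also lets a source express uncertainty instead of forcing a single label.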

4. Applications of Sensor Fusion in IVAS

- Enhanced Situational Awareness: By integrating data from various sensors, IVAS provides a richer context, helping users understand their environment more comprehensively.

- Target Identification: Combining visual and thermal data improves the accuracy of identifying targets, particularly in challenging conditions.

- Navigation and Mapping: Sensor fusion enables real-time mapping and navigation, helping users move effectively through complex terrains.

5. Benefits of Sensor Fusion in IVAS

- Increased Accuracy: By combining information from multiple sources, the system can reduce errors and improve reliability.

- Robustness: Because several sensors observe the same scene, the system can tolerate the failure or degradation of one sensor and continue operating on the remaining data.

- Real-Time Processing: Efficient fusion algorithms integrate incoming data quickly enough to provide real-time insights, which are crucial in dynamic environments.
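The accuracy benefit can be shown with a small calculation. Assuming two independent, unbiased estimates of the same quantity with known variances, weighting each by the inverse of its variance gives a fused estimate whose variance is lower than that of either input. The function name `fuse_inverse_variance` and the numbers are illustrative assumptions, not IVAS values.

```python
def fuse_inverse_variance(estimates):
    """Fuse independent estimates of one quantity.

    estimates: list of (value, variance) pairs with variance > 0.
    Returns (fused_value, fused_variance). Assumes the estimates are
    unbiased and their errors are independent.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# A coarse estimate (variance 4.0) and a precise one (variance 1.0).
value, variance = fuse_inverse_variance([(10.0, 4.0), (12.0, 1.0)])
# value is roughly 11.6 and variance roughly 0.8
```

The result leans toward the more precise sensor, and its variance is below the best single-sensor variance of 1.0.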

Conclusion

Sensor fusion techniques in IVAS play a vital role in enhancing situational awareness and operational effectiveness. By integrating data from various sensors through methods like data-level, feature-level, and decision-level fusion, IVAS provides users with a comprehensive and accurate understanding of their surroundings, significantly improving decision-making capabilities in complex environments.